AI and Deepfake Romance Scams – How to Stay Safe in 2025

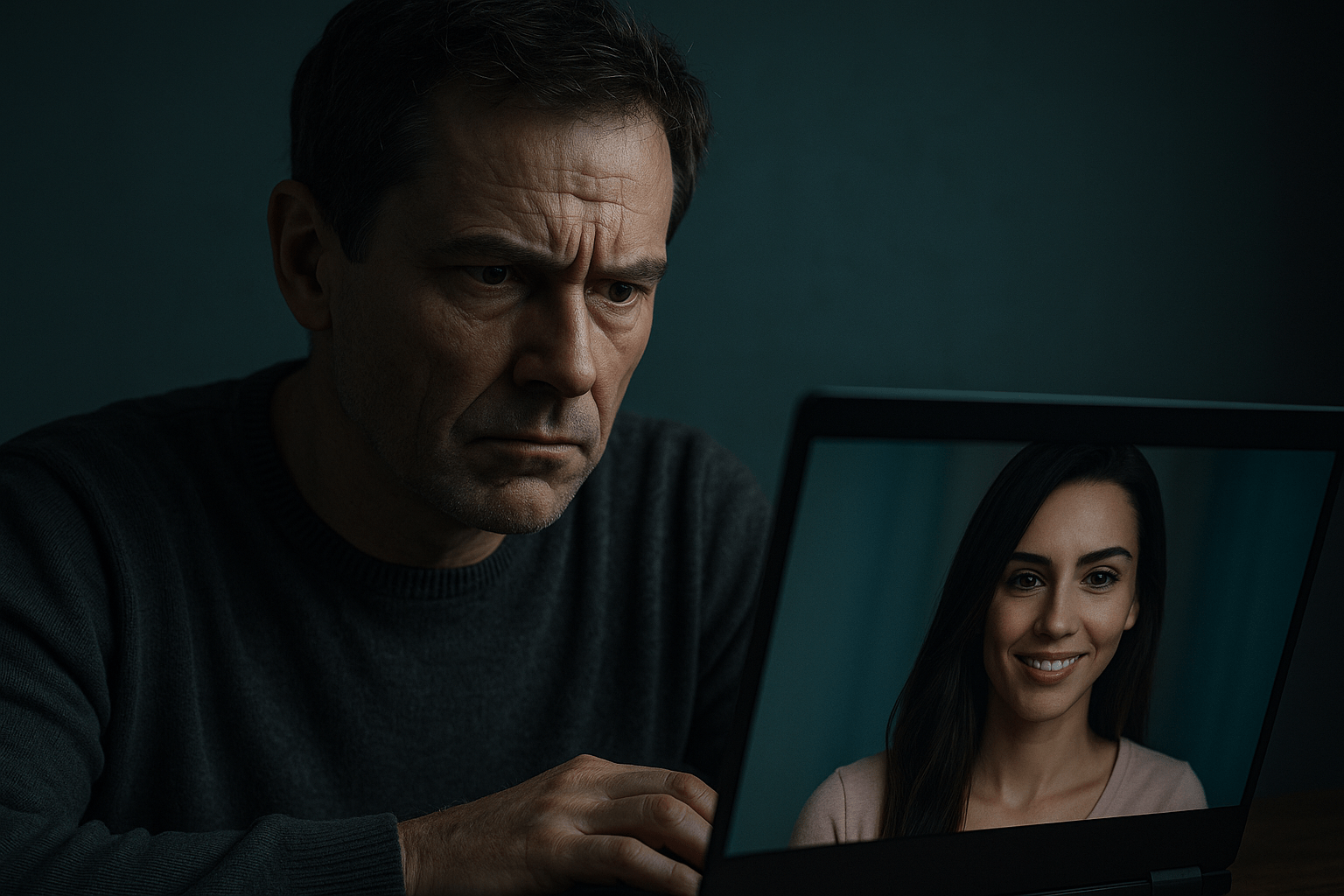

Online dating is now part of everyday life. But in 2025, it’s not only real people on dating apps. Scammers are using AI and deepfake tools to create fake romantic partners. These fake personas can look real, write like a real person, and even appear on video calls. It’s getting harder to tell who’s genuine and who’s fake, and that puts users at risk.

The Rise of AI-Powered Romance Scams

Artificial intelligence has changed how scams work. Scammers now use advanced AI programs to create complete personalities, with names, backstories, and even photos. Some of these personas can chat around the clock with emotionally convincing responses. They rarely slip up, and they’re designed to build trust fast.

Using ChatGPT-style chatbots, voice synthesis, and facial animation apps, scammers are building “perfect” partners. These AI-powered characters mimic human behavior so well that the fake can be very hard to spot until it’s too late.

Deepfake Technology Makes It Worse

It doesn’t stop with chat. Scammers now use deepfake video technology to stage realistic video calls. They take a few photos from a real person’s social media and turn them into fake moving video. You could watch someone smile, nod, and speak while the real person has no idea their face is being used.

This is what makes today’s scams so dangerous. In the past, blurry photos or broken English were clues. Now scammers have AI that writes fluently and video that looks convincingly real. Traditional checks like selfie verification or a linked social media account are no longer enough, because scammers can fake those too.

Why Most Dating Apps Are Failing Us

The big problem? Most dating platforms aren’t ready. They focus on growing their user base rather than keeping users safe. Their verification systems are outdated, and even “verified” profiles can be run with deepfake tools or stolen data. Many apps also take too long to respond when users report suspicious behavior.

These platforms often act only after a scam has already happened. They lack the tools to stop these high-tech traps before someone gets hurt, and many still rely on simple selfie checks, which are no match for today’s AI scams.

How AI Learns to Manipulate You

This isn’t just about fake photos or videos. AI can also be used to read you and turn what it learns against you. It can analyze your messages to pick up on your emotions, preferences, and weak spots, and it can even mirror your personality back at you so you feel more connected.

Many romance scam victims say they felt a real emotional connection. That’s because the AI is set up to create that feeling. It’s not accidental; it’s engineered. These bots are tuned to say the right thing, build trust step by step, and choose the moment to strike.

Real Stories, Real Damage

In 2024, a woman in Canada lost over $120,000 to a scammer using deepfake video calls and AI chat. She believed she was talking to a deployed soldier overseas. They spoke daily, shared photos, and had several video chats. But none of it was real. The man she saw on camera was a deepfake.

These scams don’t just take money; they damage trust, self-esteem, and mental health. Victims often feel shame, embarrassment, and confusion. Many suffer in silence, not realizing how common and how high-tech these scams have become.

Red Flags to Watch Out For

- Profiles that seem “too perfect” with vague or generic background stories

- Fast emotional bonding, such as saying “I love you” within days

- Video calls that look slightly “off”, with odd eye movements or stiff expressions

- Strange excuses for not meeting in person

- Requests for money, crypto, or help with “emergencies”

The Real Fix: Smarter Verification

The old methods won’t protect us anymore. Filters, photo uploads, and a linked social profile don’t prove that someone is real. We need stronger solutions. Platforms should move toward real-time video verification that tests for liveness, biometric checks, and possibly decentralized identity tools that are harder to fake.

Technology created this problem, but it can also help solve it. Dating apps must invest in smarter safety features, not just flashy designs or marketing. The only way forward is real accountability and validation methods that bots can’t easily fake.

How You Can Stay Safe

- Run a reverse image search on profile pictures to check whether they’re stolen

- Use platforms that offer strong identity verification methods

- Never send money or gifts to someone you haven’t met in real life

- Be cautious if someone avoids live video or always has excuses

- Talk to friends or online communities before making emotional or financial decisions

Dating Should Feel Safe — Not Scary

Technology will keep advancing, and so will the risks. But love and connection should feel safe, not scary. We need dating platforms that protect people with real, human-backed solutions that code can’t easily trick.

If you’ve ever felt uneasy about someone online, trust that feeling. Ask questions. Stay informed. Protect your heart and your information. And don’t settle for apps that leave your safety up to chance. In a world of artificial charm, real protection has never mattered more.